With all the hype surrounding Pokémon Go, one question came up for me: how does a company like Niantic handle data with geographic information? Processing spatial data is not an easy task, because databases are highly optimized for one-dimensional data but usually do not perform well when it comes to higher dimensions.

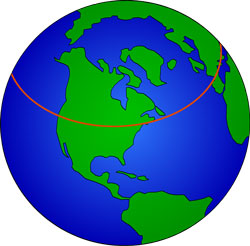

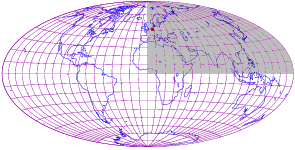

To illustrate this, let's look at the following example. For each point of interest (which could be a single Pokémon), we have a longitude and latitude encoding its exact location. If our database supports secondary indices, it seems like a good idea to create one for the longitude and one for the latitude. Given such an index, we can efficiently run a query like: give me all Pokémon with a latitude between 43°39'02"N and 43°39'05"N. We can efficiently find all entries covered by this query, but there will likely be many of them. While this is quite a narrow corridor (about 100 meters wide), it still goes all the way around the earth and covers an area of more than 2,500 square kilometers:

Therefore it will contain lots of entries. Adding bounds on the longitude does not solve the underlying problem, because the intermediate result still needs to be generated before it can be filtered by the longitude bounds. At large scale this will put the database under too much pressure. The problem here is that the database can only handle one dimension of the query at a time.
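The effect can be sketched in a few lines of Python. This is only an illustration under assumptions: the data set is random, and the sorted list `lat_index` stands in for a secondary index on latitude. It shows that the range scan on one dimension produces a large intermediate result which then has to be filtered row by row.

```python
import bisect
import random

random.seed(0)
# Hypothetical data set: (latitude, longitude) pairs in decimal degrees.
points = [(random.uniform(-90, 90), random.uniform(-180, 180))
          for _ in range(100_000)]

# A secondary index on latitude behaves like a sorted list of (latitude, row id).
lat_index = sorted((lat, row) for row, (lat, _) in enumerate(points))

def query(lat_min, lat_max, lon_min, lon_max):
    # Step 1: range scan on the latitude index (fast, but one dimension only).
    lo = bisect.bisect_left(lat_index, (lat_min, -1))
    hi = bisect.bisect_right(lat_index, (lat_max, len(points)))
    candidates = [row for _, row in lat_index[lo:hi]]
    # Step 2: every candidate must be checked against the longitude bounds.
    hits = [row for row in candidates
            if lon_min <= points[row][1] <= lon_max]
    return candidates, hits

candidates, hits = query(43.0, 44.0, 8.0, 9.0)
print(len(candidates), "rows scanned for", len(hits), "hits")
```

The number of scanned rows grows with the size of the whole corridor, not with the size of the area we are actually interested in.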

For a game like Pokémon Go, queries for all entries in a certain area or close to a certain position are an absolute necessity, and the underlying database must be able to serve them efficiently. Furthermore, we will need those queries to be served by a distributed architecture.

R-Trees

A very successful data structure for spatial access (in a k-dimensional space, not just two dimensions) is the R-Tree. The idea is to arrange all coordinates as leaves in a balanced search tree where the nodes hold bounding boxes over all coordinates of the leaves below. As long as the bounding boxes overlap only a little, one can quickly dive through only the relevant nodes down to all required leaves.

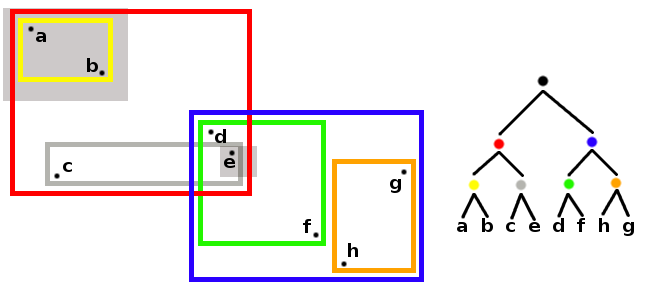

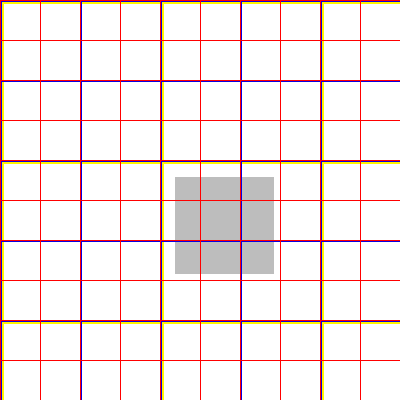

For example take eight points organized in an R-Tree as follows:

If we look for all points in the gray area covering a and b, we can dive through to those leaves via the red and yellow bounding boxes. Intersections of the bounding boxes can lead to redundant lookups. For example, searching for all points in the gray area covering e will require looking into the red, gray, blue and green bounding boxes.
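The lookup can be sketched as a recursive descent that only enters nodes whose bounding box intersects the query area. The tree below is a hypothetical two-level example with made-up coordinates (it is not the tree from the figure), and the dictionary layout is an assumption chosen for brevity.

```python
def intersects(a, b):
    """True if boxes a and b, given as (min_x, min_y, max_x, max_y), overlap."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

def contains(box, point):
    return box[0] <= point[0] <= box[2] and box[1] <= point[1] <= box[3]

def search(node, query, found, visited):
    # Skip the whole subtree if its bounding box misses the query area.
    if not intersects(node["box"], query):
        return
    visited.append(node["name"])
    for name, point in node.get("points", {}).items():
        if contains(query, point):
            found.append(name)
    for child in node.get("children", []):
        search(child, query, found, visited)

# Hypothetical tree: two leaf nodes whose bounding boxes overlap slightly.
tree = {
    "name": "root", "box": (1, 1, 9, 9),
    "children": [
        {"name": "left", "box": (1, 1, 4, 8),
         "points": {"a": (1, 1), "b": (2, 3), "c": (4, 8)}},
        {"name": "right", "box": (3.5, 2, 9, 9),
         "points": {"d": (6, 2), "e": (9, 9), "f": (3.5, 5)}},
    ],
}

def run(query):
    found, visited = [], []
    search(tree, query, found, visited)
    return found, visited

print(run((0, 0, 3, 4)))  # (['a', 'b'], ['root', 'left'])
print(run((3, 4, 5, 6)))  # (['f'], ['root', 'left', 'right'])
```

The second query falls into the overlap of both leaf boxes, so both have to be visited even though only one of them contains a matching point.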

While queries in an R-Tree are straightforward, insertions are more complicated. When inserting new coordinates, the tree should stay balanced (which means that one cannot necessarily just insert the leaf below a bounding box already covering this point) and the intersection of the bounding boxes should be kept low (which means that one should not just take any node with few leaves). In practice there are good heuristics for choosing the right insertion node and for rebalancing the tree. While these often perform well in practice, they usually do not provide performance guarantees (which should be kept in mind: there is always a chance of an attacker constructing requests such that the worst-case performance is reached).
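One commonly used heuristic, taken from the original R-Tree paper, is to descend into the child whose bounding box needs the least enlargement to include the new point, breaking ties by the smaller box. The following is a minimal sketch of just that selection step; the function names are made up for this example, and node splitting and rebalancing are left out.

```python
def area(box):
    """Area of a box given as (min_x, min_y, max_x, max_y)."""
    return (box[2] - box[0]) * (box[3] - box[1])

def enlarge(box, point):
    """Smallest box that covers both the given box and the point."""
    return (min(box[0], point[0]), min(box[1], point[1]),
            max(box[2], point[0]), max(box[3], point[1]))

def choose_child(boxes, point):
    """Index of the box that grows least when the point is added."""
    def cost(i):
        growth = area(enlarge(boxes[i], point)) - area(boxes[i])
        return (growth, area(boxes[i]))  # ties go to the smaller box
    return min(range(len(boxes)), key=cost)

boxes = [(0, 0, 4, 4), (5, 5, 6, 6)]
print(choose_child(boxes, (7, 7)))  # 1: growing the small box is cheaper
print(choose_child(boxes, (1, 1)))  # 0: the point is already covered
```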

The major drawback of R-Trees is that only a few database management systems actually support them. And those which do are often not designed to run on distributed hardware (even though it is possible to build a distributed R-Tree).

Geohashes

The practical drawbacks of R-Trees lead us to another technique called Geohashes. These seem to be very common in implementations of databases with spatial query support. This is for a good reason as Geohashes play well with the one dimensional query optimizations of common databases and do not require the implementation of a different search tree.

The idea of Geohashes is to turn coordinates into a single string. Each string represents a certain bounding box, with longer strings referring to smaller boxes. Furthermore, we want every prefix of a string to represent a bounding box that contains the box of the original string. Let's construct our very own Geohash with these properties.

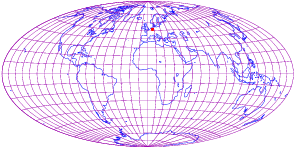

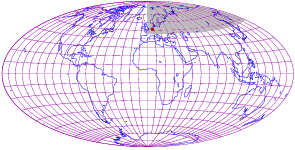

We start with a certain position (50°06'40"N 8°40'53"E) which we want to encode:

We will use the first bit of the hash to distinguish between the northern and southern hemisphere. 0 shall represent north, leading to the Geohash "0":

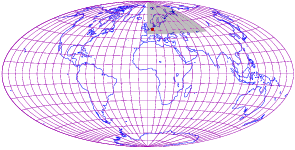

The next bit will be used to distinguish between the western and eastern longitudes. 0 will represent west. Our Geohash now is "01":

We alternate back to latitudes. Again we halve the possible range of angles. 0 will represent the half further north (45°N - 90°N). The new Geohash is "010":

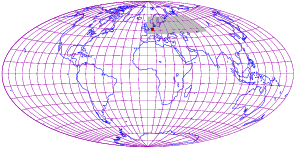

Back to longitudes. The more western half will get the digit 0. As our position is in the range 0°E - 90°E we get the Geohash "0100":

And another digit for the latitudes, narrowing down the range to 45°N - 67°30'N and leading to the Geohash "01001":

Each additional bit will increase the accuracy of the Geohash. If we continue along this scheme for the first 16 bits, we get the Geohash "0100101010010100", which represents the area between 49°55'18.75"N - 50°37'30"N and 8°26'15"E - 9°50'37.5"E. This is still an area of roughly 7500 square kilometers. But each additional bit in the Geohash will cut this roughly in half, which will quickly get the area in question down to very precise levels. Note that the Geohash can also be represented in a more convenient form than binary. Our 16-bit Geohash in hexadecimal representation becomes "4A94".
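The construction above can be written down as a short Python sketch. The function names (`dms`, `encode`, `decode`, `to_hex`) are made up for this example; the bit convention follows the text: even positions split the latitude range (0 = northern half), odd positions split the longitude range (0 = western half).

```python
def dms(degrees, minutes, seconds):
    """Convert degrees, minutes, seconds to decimal degrees."""
    return degrees + minutes / 60 + seconds / 3600

def encode(lat, lon, bits):
    """Binary hash for lat (north positive) and lon (east positive)."""
    lat_lo, lat_hi, lon_lo, lon_hi = -90.0, 90.0, -180.0, 180.0
    out = []
    for i in range(bits):
        if i % 2 == 0:                      # latitude bit, 0 = northern half
            mid = (lat_lo + lat_hi) / 2
            if lat >= mid:
                out.append("0")
                lat_lo = mid
            else:
                out.append("1")
                lat_hi = mid
        else:                               # longitude bit, 0 = western half
            mid = (lon_lo + lon_hi) / 2
            if lon < mid:
                out.append("0")
                lon_hi = mid
            else:
                out.append("1")
                lon_lo = mid
    return "".join(out)

def decode(geohash):
    """Bounding box (lat_lo, lat_hi, lon_lo, lon_hi) in decimal degrees."""
    lat_lo, lat_hi, lon_lo, lon_hi = -90.0, 90.0, -180.0, 180.0
    for i, bit in enumerate(geohash):
        if i % 2 == 0:
            mid = (lat_lo + lat_hi) / 2
            if bit == "0":
                lat_lo = mid
            else:
                lat_hi = mid
        else:
            mid = (lon_lo + lon_hi) / 2
            if bit == "0":
                lon_hi = mid
            else:
                lon_lo = mid
    return lat_lo, lat_hi, lon_lo, lon_hi

def to_hex(geohash):
    """Hexadecimal form; assumes the length is a multiple of four bits."""
    return format(int(geohash, 2), "0{}X".format(len(geohash) // 4))

position = (dms(50, 6, 40), dms(8, 40, 53))
h = encode(*position, 16)
print(h)          # 0100101010010100
print(to_hex(h))  # 4A94
print(decode(h))  # (49.921875, 50.625, 8.4375, 9.84375)
```

Because every bit only narrows the box of the bits before it, `encode(lat, lon, 5)` is always a prefix of `encode(lat, lon, 16)`, which is exactly the prefix property we asked for.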

Of course Geohashes can only represent positions up to a certain precision. But as spatial information in practice comes with a certain amount of inaccuracy this should not be too much of a problem (a 128 bit Geohash can give you accurate locations on the subatomic level).
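To get a feeling for the numbers, the north-south extent of a cell can be estimated from the number of bits alone. This is a rough sketch which assumes that one degree of latitude is about 111.32 kilometers and that bits alternate between latitude and longitude, starting with latitude.

```python
METERS_PER_DEGREE = 111_320  # rough length of one degree of latitude

def cell_height_m(bits):
    """Approximate north-south extent in meters of a cell with `bits` bits."""
    lat_bits = (bits + 1) // 2          # bits at even positions split latitude
    return 180 / 2 ** lat_bits * METERS_PER_DEGREE

for bits in (16, 32, 64, 128):
    print(bits, "bits:", cell_height_m(bits), "m")
```

For 128 bits the result is on the order of a picometer, well below the size of an atom.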

Let's come back to our original range queries. Using Geohashes, we were able to reduce the multidimensional positional information to one-dimensional entries. For each datum we can save the Geohash as an indexed column. The property of growing precision now helps us to efficiently query for all elements in certain areas. Each range query over a certain Geohash prefix directly corresponds to a certain area on the map.
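A prefix query is an ordinary range scan on a sorted column. In the following sketch a sorted Python list stands in for the index, and the hashes in it are made up for the example.

```python
import bisect

def prefix_query(sorted_hashes, prefix):
    """All hashes starting with `prefix`, found with two binary searches."""
    lo = bisect.bisect_left(sorted_hashes, prefix)
    # "2" sorts after "0" and "1", so prefix + "2" is an exclusive upper bound.
    hi = bisect.bisect_left(sorted_hashes, prefix + "2")
    return sorted_hashes[lo:hi]

# Hypothetical index content.
index = sorted(["0100", "0101", "0110", "0011", "01"])
print(prefix_query(index, "010"))  # ['0100', '0101']
```

The same idea translates to a `BETWEEN` or prefix condition on an indexed string column in most databases.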

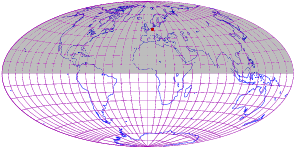

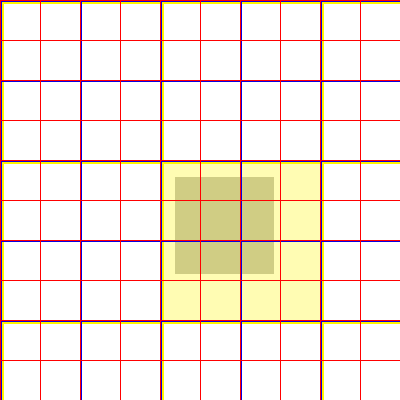

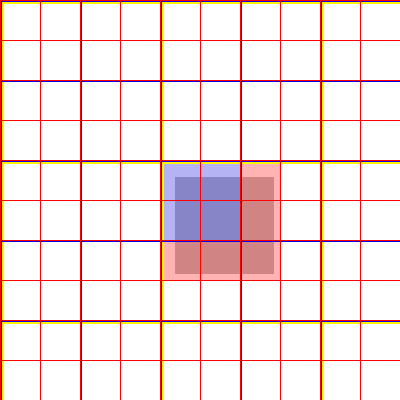

Assume we want to find all elements in the gray area:

One possibility is to formulate a single range query which will return all elements in the yellow square (using the prefix corresponding to this square). These can then be filtered for the elements which are exactly within the gray bounds.

Alternatively, one can perform multiple finer-grained queries. In the example one could query for the blue area as well as the five red areas. In this case we need to perform more queries, but we get fewer elements which need to be filtered afterwards.
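The trade-off between few coarse queries and many fine ones can be explored with a small recursive sketch that computes the prefixes covering a query rectangle. It assumes the bit convention of the Geohash constructed above (even positions split latitude with 0 = north, odd positions split longitude with 0 = west); `cell` and `cover` are names made up for this example, and `max_bits` is the tuning knob.

```python
def cell(prefix):
    """Bounding box (lat_lo, lat_hi, lon_lo, lon_hi) of a binary prefix."""
    lat_lo, lat_hi, lon_lo, lon_hi = -90.0, 90.0, -180.0, 180.0
    for i, bit in enumerate(prefix):
        if i % 2 == 0:
            mid = (lat_lo + lat_hi) / 2
            if bit == "0":
                lat_lo = mid
            else:
                lat_hi = mid
        else:
            mid = (lon_lo + lon_hi) / 2
            if bit == "0":
                lon_hi = mid
            else:
                lon_lo = mid
    return lat_lo, lat_hi, lon_lo, lon_hi

def cover(query, max_bits, prefix=""):
    """Prefixes whose cells together cover query = (lat_lo, lat_hi, lon_lo, lon_hi)."""
    lat_lo, lat_hi, lon_lo, lon_hi = cell(prefix)
    q_lat_lo, q_lat_hi, q_lon_lo, q_lon_hi = query
    # Prune cells that lie completely outside the query rectangle.
    if (lat_hi < q_lat_lo or lat_lo > q_lat_hi
            or lon_hi < q_lon_lo or lon_lo > q_lon_hi):
        return []
    inside = (q_lat_lo <= lat_lo and lat_hi <= q_lat_hi
              and q_lon_lo <= lon_lo and lon_hi <= q_lon_hi)
    # Stop at cells fully inside the query or at the maximum precision.
    if inside or len(prefix) == max_bits:
        return [prefix]
    return (cover(query, max_bits, prefix + "0")
            + cover(query, max_bits, prefix + "1"))

query = (49.9, 50.3, 8.5, 8.9)
print(len(cover(query, 16)), "coarse prefixes")
print(len(cover(query, 24)), "finer prefixes")
```

A small `max_bits` yields few prefixes with a lot of surplus area to filter, while a larger value yields more prefixes that fit the rectangle more tightly.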

The ideal query strategy here is a matter of fine tuning. In both cases the queries are well tailored towards the way that most databases work and we will get a much better performance than naively querying for a latitude / longitude rectangle. Furthermore we can rely on the already implemented sharding techniques if the database is distributed.

The Geohashing algorithm I presented is only a simple one. It can be improved to exhibit more desirable properties. In our case the squares are visited in a Z shape but using Hilbert curves instead will result in more neighboring squares having neighboring Geohash prefixes (see this blog post). Furthermore in our case Geohashes which are closer to the poles correspond to smaller areas than those close to the equator (an issue which in theory should also be resolvable). Finally note that the Geohash system is not restricted to spherical coordinates but can be extended to a k-dimensional bounded space (where k should not be too large).
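The shrinking of cells towards the poles is easy to quantify: the angular width of a cell is fixed by the number of longitude bits, but the distance covered by one degree of longitude scales with the cosine of the latitude. A rough sketch, assuming a spherical earth and about 111.32 kilometers per degree at the equator:

```python
import math

KM_PER_DEGREE = 111.32  # rough length of one degree of longitude at the equator

def cell_width_km(lat_deg, lon_bits):
    """Approximate east-west extent of a cell at the given latitude."""
    lon_span = 360 / 2 ** lon_bits
    return lon_span * KM_PER_DEGREE * math.cos(math.radians(lat_deg))

for lat in (0, 50, 60, 80):
    print(lat, "degrees:", round(cell_width_km(lat, 8), 1), "km")
```

With eight longitude bits, a cell at 60° latitude is only about half as wide as a cell at the equator.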

Conclusion

It seems that for practical purposes Geohashes are a very useful tool for encoding spatial information in databases. But they also have limitations, especially if we want to encode shapes instead of points. More about problems arising from big spatial data in this blog post by Andrew Rogers.

Luckily, many databases support some form of spatial encoding out of the box or via a plugin. Hopefully this blog post shed some light on the inner workings of these systems and can help you when evaluating appropriate database systems for geodata.

Resources and helpful links

- Blog post about the difficulties associated with geo data

- Blog post about Geohashes and Hilbert curves

- Original R-Trees paper

- Wikipedia article about Geohashes

- Wikipedia article about R-Trees

- Wikipedia list of databases with out of the box spatial processing

Header photo by NASA
